Chapter 6 Applications of Ridge and Lasso

6.1 Cross Validation

One of the key features of a good model is prediction accuracy. If our prediction varies a lot when we use the model on other datasets, then our model is bad at making prediction, and our model is overfitting to that the training dataset. Therefore, we also want to estimate the prediction accuracy of our models to decide whether it is good at making predictions; and one way of estimation prediction accuracy is cross validation.

(from Hands-On Machine Learning with R 2.4 by Bradley Boehmke & Brandon Greenwell)

Cross validation randomly divides the data set into k folds, k-1 training groups to which models will be fitted and 1 testing group to test the model. For example, in the image above, the dataset is divided into 5 groups, the blue ones as the training groups and the pink one as the test group. The process is repeated 5 times and averaging the RMSE for each time gives the cross-validation RMSE of the model. In our simulation, k=10 will be used to minimize the variability and imporve the accuracy of the models.

6.2 Dataset

This dataset is obtained from https://raw.githubusercontent.com/juliasilge/supervised-ML-case-studies-course/master/data/cars2018.csv. The goal is to find how different features of a car affect its miles per gallon (MPG). The outcome variable is numerical while the predictor variables are a mix of numerical and categorical variables. The dataset is splitted into training data with 70% of the data and test data with 30%. In this case, we would use the training data to fit our linear model and test its prediction accuracy with the remaining data.

6.3 OLS

We first fit an OLS model to the training data. The result shows that the coefficient for Gears is 0.1338 and the coefficient for Cylinders is 0.5918. The RMSE for this model is 3.0272.

6.4 RIDGE

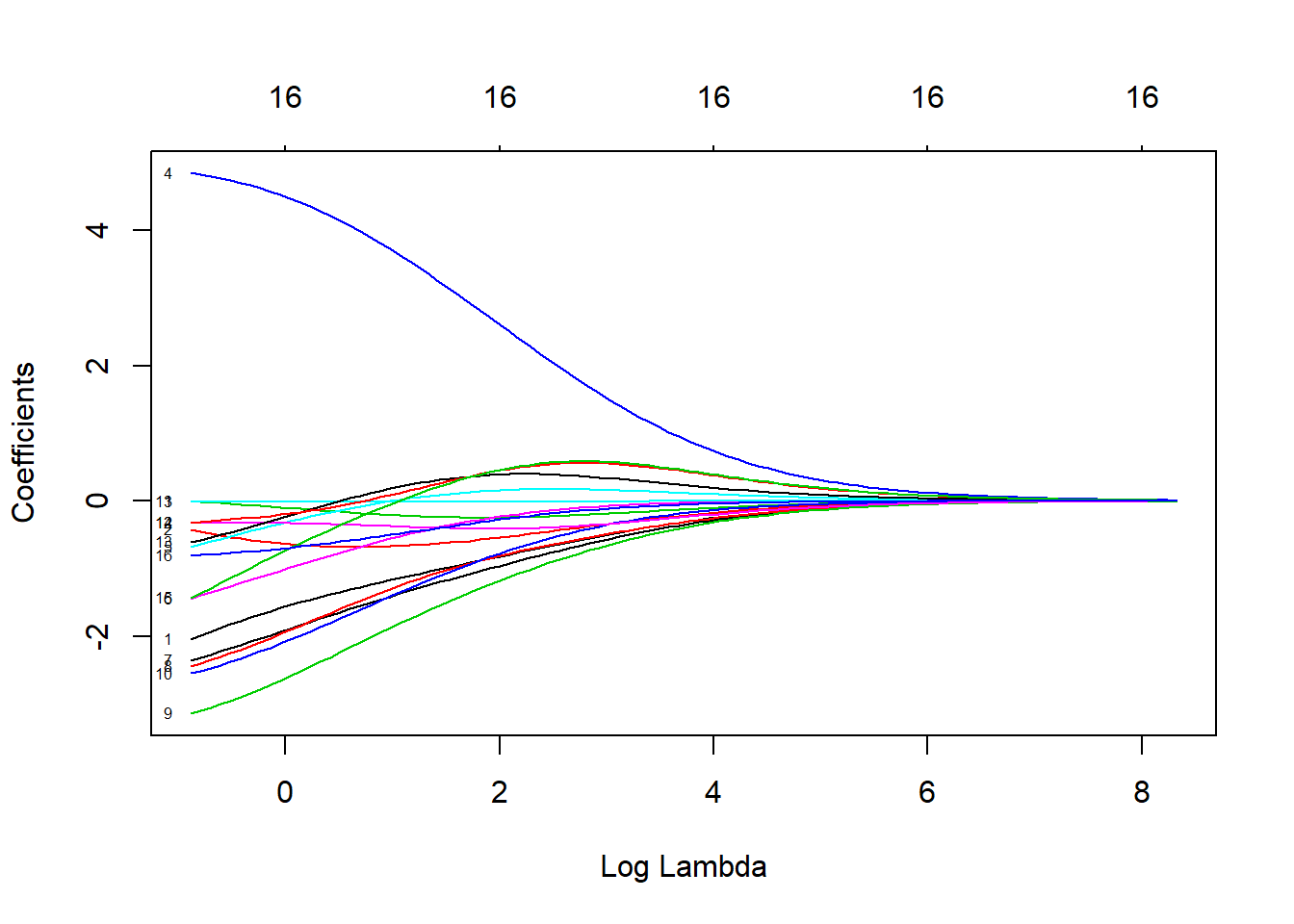

Secondly, we fit a ridge model to the training data. We try with a range of lambda values and use the bestTune$lambda function to find the lambda value that minimizes the RMSE. Then we plug in this lambda value and make a new ridge model. We get a RMSE of 3.0743, which is slightly higher than the OLS RMSE. The coefficient for Cylinders is shrunk to -0.4284 and the one for Gear is shrunk to -0.0064, but none of the coefficients is set to 0 though some of them are close to 0. The graph below shows the shrinkage of coefficients. We can see the as lambda increases, all of them get close to 0 but not equal to 0.

set.seed(455)

cars_ridge <- train(

MPG ~ .,

data = cars_train,

method = "glmnet",

trControl = trainControl(method = "cv",

number = 10),

tuneGrid = data.frame(alpha = 0,

lambda = 10^seq(-3,0, length = 100)),

na.action = na.omit

)

cars_ridge$bestTune$lambda

6.5 LASSO

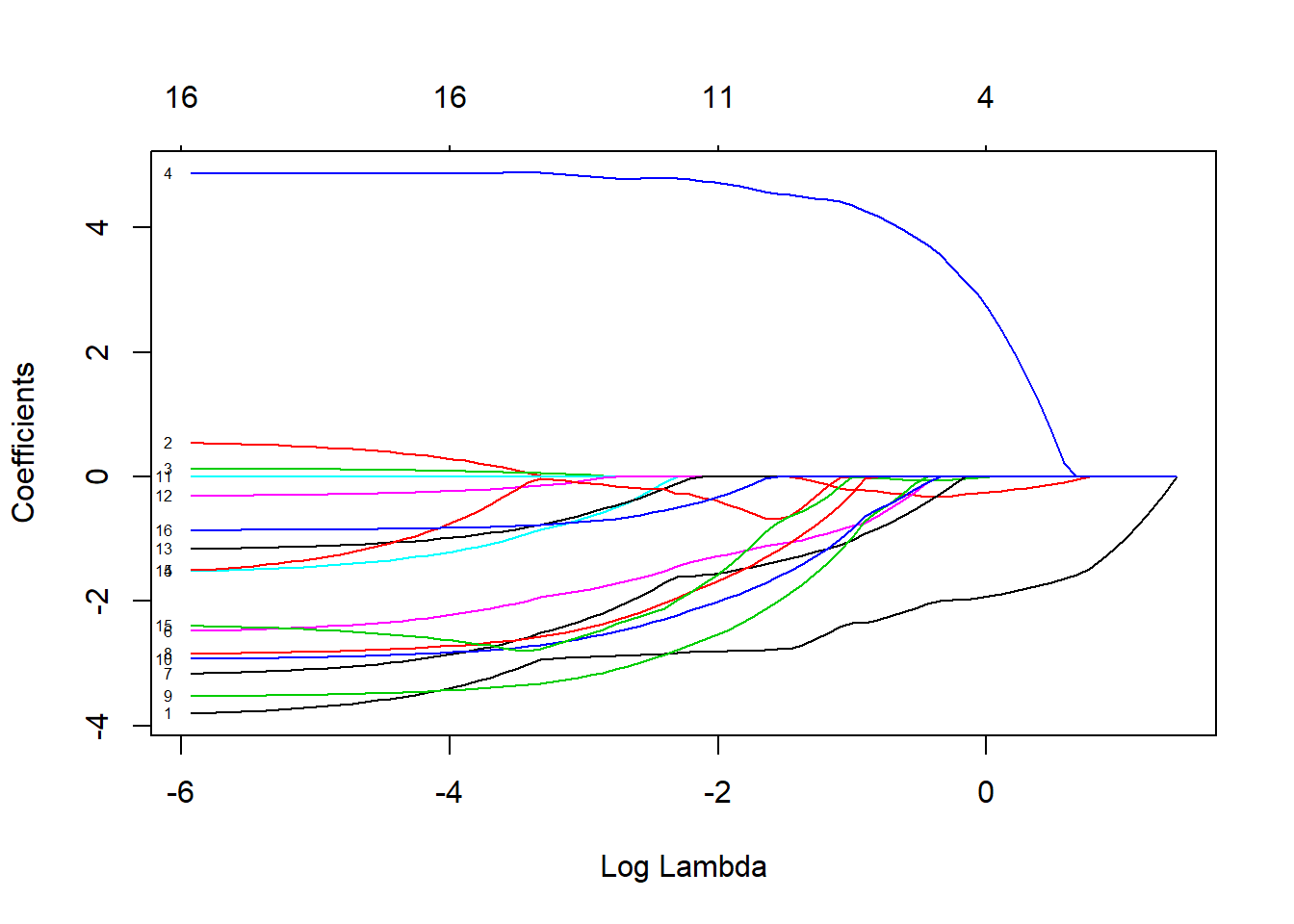

We repeat the same process to fit the LASSO model. In terms of coding, the only difference is to change the alpha value in the tuneGrid from 0 to 1. We get a RMSE of 3.0255, which is the smallest. Some coefficients such as Cylinders and Gears are set to 0. It is interesting that the coefficient TransmissionManual is set to 0 while TransmissionCVT, the other term of the same categorical variable, is not. This leads to one of the limitation of LASSO models when analyzing categorical variables. From the graph showing the shrinkage of coefficients, we can see the as lambda increases, all of them get to exactly 0.

set.seed(455)

cars_lasso <- train(

MPG ~ .,

data = cars_train,

method = "glmnet",

trControl = trainControl(method = "cv",

number = 10),

tuneGrid = data.frame(alpha = 1,

lambda = 10^seq(-3, -2, length = 100)),

na.action = na.omit

)

cars_lasso$bestTune$lambda

## 17 x 1 sparse Matrix of class "dgCMatrix"

## 1

## (Intercept) 39.75545077

## Displacement -2.83657321

## Cylinders .

## Gears .

## TransmissionCVT 4.79076756

## TransmissionManual .

## AspirationTurbocharged/Supercharged -1.44680028

## `Lockup Torque Converter`Y -1.61692083

## Drive2-Wheel Drive, Rear -1.94168304

## Drive4-Wheel Drive -2.78768269

## DriveAll Wheel Drive -2.22100559

## `Max Ethanol` -0.00222014

## `Recommended Fuel`Premium Unleaded Required .

## `Recommended Fuel`Regular Unleaded Recommended -0.10788122

## `Intake Valves Per Cyl` -0.27837487

## `Exhaust Valves Per Cyl` -1.97358320

## `Fuel injection`Multipoint/sequential ignition -0.498521926.6 Test data

We also apply the models to the test data to obtain the test RMSEs.

6.7 Summary

From the summary table above, we can see that for this dataset, Lasso always gives the smallest RMSE no matter for the training group or the testing group. Also, according to the graphs of shrinking coefficients, we can see that some coefficients can reach zero for Lasso regression; while in the graph for ridge regression, all coefficients decrease towards zero but they never reach the x-axis. By shrinking some coefficients to zero, Lasso regression can also help us to select variables. Therefore, Lasso regression is also better than ridge when we need to select key variables.